Devlog 10 - Latency Calibration

Published: August 6th, 2021

Along with fleshing out a bunch of the settings menus, this week I worked on one of my long-standing high-priority items: a reworked latency calibration screen.

As mentioned briefly in a previous devlog, audio output always has some amount of latency/delay on every device, and it's important that we be able to measure this so that we can "queue up" sound/music in advance to account for it. Unfortunately, the exact latency differs from device to device, so there is no universal measurement that works (plus, different players may perceive latency differently due to psychoacoustics, etc.). Thus, we need to build some sort of latency calibration system so that players can easily adjust this for themselves.

Types of Latency

Actually, there are three separate types of latency that are relevant to us for synchronizing music with gameplay: audio latency, visual latency, and input latency.

Audio latency is the delay between playing a sound and when the sound is actually able to be heard. This is caused by various audio buffering systems, mixing delays, hardware/engine limitations, Bluetooth headphone transmission time, the time it takes for sound to travel through the air, etc.

Visual latency is the delay between rendering an image and when that image is actually able to be seen. This is caused by double-buffering/rendering queue systems, monitor refresh/update characteristics, etc.

Input latency is the delay between a player performing an input and when that input is actually able to be handled by the game. This is caused by input debouncing / processing delays, frame-based input handling, wireless controller transmission times, other stuff in the engine, etc.

Trying to minimize these latencies usually involves adjusting various engine settings and, past that, bypassing engine functionality entirely to interface directly with the low-level platform APIs. For example, bypassing Unity's audio mixing and input processing systems will result in much lower latencies... but of course you lose out on those features (unless you re-implement them yourself).

Note that audio latency is usually the largest of the three. (This is especially true on Android devices, which are notorious for having high amounts of audio latency.) Input and visual latency already tend to be well optimized, since most other games depend on them: if pressing a button does not result in immediate visual feedback, games feel very unresponsive. These systems also do not require the same sort of mixing and buffering that audio does. (One notable exception to this generalization would be when playing on a video projector or something like that.)

Measuring Latency

The standard way to measure and adjust for latency is through some sort of tap test (tap to the beat, or tap to the visual indicator), or by adjusting a video/audio offset.

Unfortunately, we can never measure a single type of latency by itself using a tap test. Having a user tap to an audio signal will give you the sum of audio latency + input latency. Similarly, having a user tap to a visual signal will give you the sum of visual latency + input latency. Subtracting one from the other cancels out the shared input latency term and should, in theory, give you an audio/video offset value.
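To make the arithmetic concrete, here's a minimal sketch of how two tap tests could be combined into an audio/video offset. The function name, the tap timestamps, and the 0.5 second beat interval are all made up for illustration; this isn't code from the game.

```python
from statistics import mean

def mean_tap_offset(tap_times, beat_interval, first_beat=0.0):
    """Average signed offset (seconds) of each tap from its nearest beat."""
    offsets = []
    for t in tap_times:
        # Snap the tap to the closest scheduled beat, then measure how late
        # (positive) or early (negative) the tap was relative to that beat.
        beats_elapsed = round((t - first_beat) / beat_interval)
        nearest_beat = first_beat + beats_elapsed * beat_interval
        offsets.append(t - nearest_beat)
    return mean(offsets)

# Hypothetical tap timestamps (seconds, on the game's clock) for a 0.5 s beat.
audio_taps = [0.62, 1.11, 1.63, 2.12]   # tapping along to a click sound
visual_taps = [0.54, 1.03, 1.55, 2.04]  # tapping along to a flashing indicator

audio_plus_input = mean_tap_offset(audio_taps, 0.5)    # audio + input latency
visual_plus_input = mean_tap_offset(visual_taps, 0.5)  # visual + input latency

# Input latency appears in both sums, so it cancels out in the difference.
av_offset = audio_plus_input - visual_plus_input
print(f"audio/video offset: {av_offset * 1000:.0f} ms")
```

Neither tap test isolates a single latency on its own, but the difference between them is independent of input latency, which is why it's usable as an audio/video offset.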

Depending on the exact needs of your game, there are a couple of different ways that you can set up calibration measurements.

Examples

The system of "audio tap test, video tap test" measurements described above definitely isn't the only way to set up a calibration system.

Rhythm Doctor (a very nice one-button rhythm game!) splits calibration into two phases. In the first phase the user adjusts the video/audio offset so that both are synchronized:

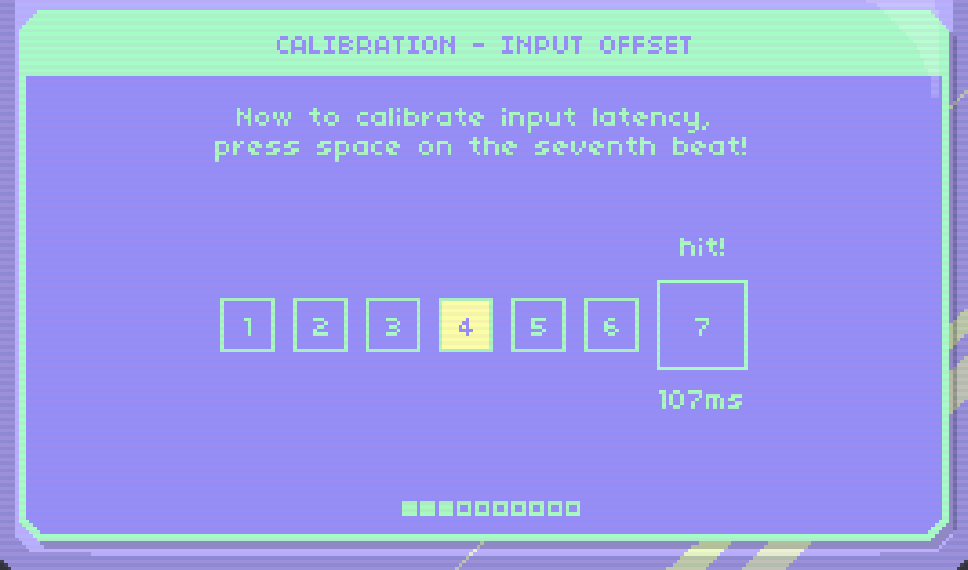

In the second calibration phase, the audio/video sync has already been established, so all that's left is to determine input latency via a tap test:

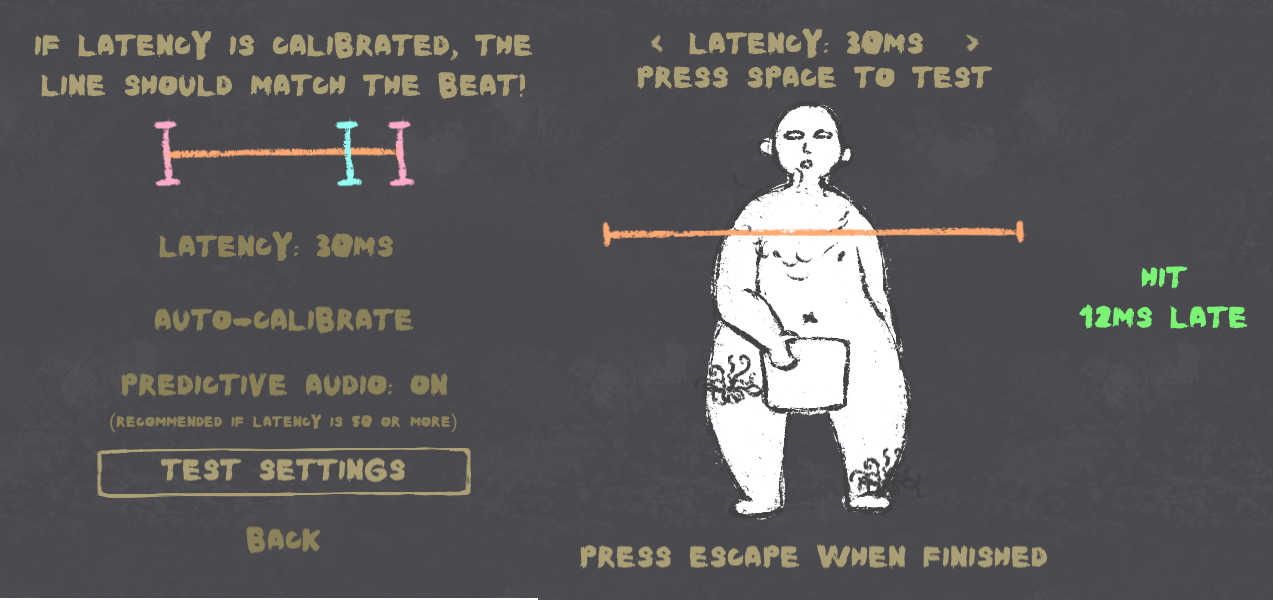

It's worth noting that this "seventh beat tap test" mirrors the actual gameplay of the rest of the game, so it's very representative and should therefore hopefully be accurate. I tried to do the same thing in Samurai Shaver -- I provide a "test scene" where you can simulate actual gameplay and adjust latency to get immediate feedback on whether your calibration is working out.

Assumptions and Restrictions for Rhythm Quest

Rhythm Quest isn't a normal "judgment-based" rhythm game like Dance Dance Revolution, or Guitar Hero, or Arcaea, or whatever. In particular, my ability to account for visual + input latency is minimal.

In traditional rhythm games, the judgment for a note can be delayed until a bit after the note has passed. When playing Guitar Hero on a calibrated setup with a lot of visual latency, for example, you hit each note as it "appears" to cross the guideline, but the note doesn't actually respond as being hit until it's travelled significantly past that point.
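As a rough sketch of that kind of delayed judgment (the function name, the 50ms hit window, and the numbers are hypothetical, not taken from any particular game), the tap's timestamp is shifted back by the calibrated latency before it's compared against the note:

```python
def judge_tap(tap_time, note_time, total_latency, hit_window=0.05):
    """Return True if a tap counts as a hit once latency is subtracted out."""
    # The game receives the tap late by the calibrated visual + input latency,
    # so shift it back before comparing it with the note's intended time.
    corrected_time = tap_time - total_latency
    return abs(corrected_time - note_time) <= hit_window

# With 100 ms of calibrated latency, a tap received at 2.11 s still counts as
# hitting a note at 2.0 s, even though the note has visibly passed by then.
print(judge_tap(tap_time=2.11, note_time=2.0, total_latency=0.1))  # True
# A tap received exactly at 2.0 s is treated as 100 ms early and misses.
print(judge_tap(tap_time=2.0, note_time=2.0, total_latency=0.1))   # False
```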

That doesn't work as well for Rhythm Quest:

High amounts of input and visual latency can really throw off the game, to the point where normally a respawn would be triggered. Jumping is a good example of this -- in order to properly account for 100ms of input latency, by the time my code receives the jump button press, you ought to already be partway through your jump!

For playing sound effects, I can work around this kind of thing just fine. Rhythm Quest (by default) preschedules hit and jump sounds at the correct times, so even with significant audio latency, they will play at the appropriate timing. Note that this also means that even if you don't press anything and miss the hit, the correct sound will still play. While this is not 100% ideal, it is an effective compromise that needed to be made in order for sfx timing to be accurate. (This technique is used in other games as well.)
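Here's a minimal sketch of the prescheduling idea, assuming a simple polling loop. The function names, the 200ms lookahead, and the note times are illustrative assumptions rather than Rhythm Quest's actual code:

```python
def schedule_time(target_time, audio_latency):
    """When to hand a sound to the audio system so it is heard at target_time."""
    return target_time - audio_latency

def sounds_to_schedule(note_times, now, audio_latency, lookahead=0.2):
    """Find notes whose sound effects need to be queued within the lookahead."""
    due = []
    for note_time in note_times:
        start = schedule_time(note_time, audio_latency)
        # Queue the sound if its latency-adjusted start time falls inside the
        # window [now, now + lookahead), regardless of any player input.
        if now <= start < now + lookahead:
            due.append((note_time, start))
    return due

# Hypothetical chart with 150 ms of audio latency, polled at t = 0.8 s.
# Only the note at 1.0 s is due; its sound starts at roughly 0.85 s.
due = sounds_to_schedule([1.0, 1.5, 2.0], now=0.8, audio_latency=0.15)
print(due)
```

Since the sound is queued before the player has had a chance to press anything, it plays whether or not the hit actually lands, which is the compromise described above.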

But for visuals this doesn't work as well. If I "preschedule visual effects" then I'd have to play an animation of you slashing an enemy, only to find out 100 milliseconds later that you never actually pressed a button. "Rewinding" that visual state would be incredibly jarring. Similarly, if I tried to account for jump input latency by starting all jumps 100ms in, the jumping animation would look very jerky on startup.

Given this, the solution I've chosen to go with is to assume that visual + input latency is relatively minimal and trust the player to compensate for it themselves. In fact, input delay is something present in pretty much all games to some extent, and even competitive fighting games frequently deal with 50-100ms of lag. Most people should hopefully adapt and adjust naturally to this sort of latency based on visual and auditory feedback.

The calibration in Rhythm Quest is thus primarily focused on determining the best value for audio vs video offset.

Putting It into Practice

Here's a video of the single-screen latency calibration system that I've built out so far:

It's a bit busy (actual UI still not final), but the basic concept works out pretty nicely. This screen is sort of a combination tap test plus user-driven audio/video offset calibration. The top section allows the user to tap in order to establish a rhythm, and the bottom section lets the user adjust the audio offset accordingly.

The end result is very immediate in that it should be very easy to tell by eye when calibration looks "locked in". (The UI will also highlight the appropriate button if it's obvious that an adjustment needs to be made.) The process is also relatively quick and doesn't require you to spend a full minute tapping to a sound 16 times or whatever, which is something I'm trying to avoid, as it causes friction for people who just want to jump into the game.

The design of this screen leverages the fact that the human eye is very good at picking up slight differences in timing between two flashing objects (i.e. "which of these two squares flashed first"). I actually only keep 4 tap samples (one for each square), which is very low for a tap test (usually you'd take 10 or so and throw out the min and max). However, I can get away with this because it is immediately obvious (by sight) if your taps were inconsistent in a notable way.
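For comparison, the more conventional "take 10 or so and throw out the min and max" approach might look something like the sketch below. The function name and the sample offsets are made up for illustration:

```python
def trimmed_mean_offset(offsets):
    """Average the tap offsets after discarding the smallest and largest."""
    if len(offsets) < 3:
        raise ValueError("need at least 3 samples to drop the min and max")
    # Sorting puts the two extremes at the ends so they can be sliced off.
    trimmed = sorted(offsets)[1:-1]
    return sum(trimmed) / len(trimmed)

# Ten hypothetical tap offsets in seconds, including two obvious outliers
# (0.30 and 0.02) that would skew a plain average.
samples = [0.10, 0.12, 0.11, 0.30, 0.09, 0.10, 0.11, 0.12, 0.10, 0.02]
estimate = trimmed_mean_offset(samples)
print(f"estimated offset: {estimate * 1000:.1f} ms")
```

With only 4 samples, dropping two of them would leave very little to average, so relying on the player to spot inconsistent taps by eye fills the same role as outlier rejection here.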

Note that it's very important that the button tap here does NOT play sounds, as those sounds would of course be scheduled with latency, and throw off the user.

The exact design of this screen will probably need to be tweaked (I probably need to hide the bottom row at first...), but so far I'm liking it much better than the standard "sit here and tap a button 10 times" design. I'm hoping that this will allow people to spend less time on this and jump into the game more quickly.
